Text Classification Techniques: Bridging the Gap Between Words and Meaning

In the vast landscape of Natural Language Processing (NLP), text classification stands as a pivotal task that fuels a wide range of applications. From sentiment analysis to spam detection, and topic categorization to language identification, text classification plays a crucial role in making sense of unstructured text data. In this blog, we will explore various text classification techniques, ranging from traditional machine learning algorithms to cutting-edge deep learning models, and understand how they bridge the gap between words and meaning.

1. Traditional Machine Learning Algorithms

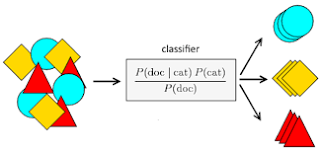

a) Naive Bayes:

Figure: Naive Bayes classifier

In the vast realm of Natural Language Processing (NLP) and text classification, Naive Bayes emerges as a stalwart algorithm, armed with the elegance of Bayes' theorem and the essence of simplicity. Although straightforward in its approach, Naive Bayes has proven time and again to be a powerful and effective tool for various text classification tasks. Let's delve into the intricacies of this probabilistic algorithm, including its architecture, and understand why it shines in sentiment analysis and spam detection.

Bayes' Theorem at the Core

At the heart of Naive Bayes lies Bayes' theorem, a fundamental concept in probability theory. This theorem enables the algorithm to make predictions based on prior knowledge and evidence from new data. In the context of text classification, Naive Bayes leverages Bayes' theorem to calculate the probability of a document belonging to a particular class, given its features (words).

Architecture and Probabilistic Model

The architecture of Naive Bayes is relatively simple, as it comprises three main components:

1. Feature Space: The feature space represents the words or features extracted from the text data. Each document is represented as a vector of features, where each feature corresponds to a word or term in the document.

2. Training Phase: During the training phase, Naive Bayes estimates the prior probabilities of each class and calculates the likelihoods of each feature (word) occurring in each class. These probabilities are based on the frequency of words in the training data for each class.

3. Classification Phase: In the classification phase, given a new document, Naive Bayes calculates the posterior probability of the document belonging to each class using Bayes' theorem and the likelihoods obtained during training. The document is then assigned to the class with the highest posterior probability.
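To make the training and classification phases above concrete, here is a minimal sketch in plain Python. The toy documents, the function names `train_naive_bayes` and `predict_naive_bayes`, and the use of add-one (Laplace) smoothing are assumptions made for this illustration, not the API of any particular library.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(docs, labels):
    """Estimate class priors and per-class word counts from tokenized documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for tokens, label in zip(docs, labels):
        word_counts[label].update(tokens)
        vocab.update(tokens)
    priors = {c: n / len(labels) for c, n in class_counts.items()}
    return priors, word_counts, vocab

def predict_naive_bayes(tokens, priors, word_counts, vocab):
    """Return the class with the highest log posterior (up to a shared constant)."""
    scores = {}
    for c, prior in priors.items():
        total = sum(word_counts[c].values())
        score = math.log(prior)
        for t in tokens:
            if t not in vocab:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing: an assumption of this sketch
            score += math.log((word_counts[c][t] + 1) / (total + len(vocab)))
        scores[c] = score
    return max(scores, key=scores.get)

# Toy training data, invented for illustration
train_docs = [
    "win free prize now".split(),
    "free money offer".split(),
    "meeting at noon tomorrow".split(),
    "project report attached".split(),
]
train_labels = ["spam", "spam", "ham", "ham"]

model = train_naive_bayes(train_docs, train_labels)
print(predict_naive_bayes("free prize offer".split(), *model))        # spam
print(predict_naive_bayes("report for the meeting".split(), *model))  # ham
```

Summing log probabilities instead of multiplying raw probabilities avoids numerical underflow on longer documents.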

Assumption of Feature Independence

The "naive" aspect of Naive Bayes stems from its assumption of feature independence, which simplifies the calculations significantly. This assumption posits that, given the class, the presence of a particular word in a document is independent of the presence of other words, so the likelihood of a document factorizes into a product of per-word probabilities. In reality, this assumption rarely holds, as words in a sentence are often interconnected and contextually related. However, despite this simplification, Naive Bayes often performs remarkably well in practice.

Applications in Sentiment Analysis and Spam Detection

Naive Bayes finds practical applications in sentiment analysis, where the goal is to determine the sentiment or emotional tone of a piece of text (e.g., positive, negative, or neutral). The algorithm can classify text documents based on the occurrence of specific words and their association with sentiments.

Furthermore, Naive Bayes is highly effective in spam detection, a task that involves distinguishing between legitimate and unwanted emails. By learning from a labeled dataset of spam and non-spam emails, Naive Bayes can identify spam patterns and classify new emails accordingly.

The Elegance of Simplicity

Despite its seemingly "naive" assumptions, Naive Bayes continues to thrive in the world of text classification. Its simplicity, speed, and ability to handle high-dimensional data make it a preferred choice for certain applications. Additionally, Naive Bayes can serve as a strong baseline for more complex algorithms, providing valuable insights into the underlying data distribution and the nature of the classification problem.

b) Support Vector Machines (SVM):

In the vast landscape of text classification, Support Vector Machines (SVM) stands as a powerful and versatile supervised learning algorithm, ready to tackle the complexities of language with utmost precision. With its unique approach of finding optimal hyperplanes, SVM has proven to be a formidable contender in various text classification tasks, delivering high accuracy and reliable results. Let's delve into the architecture and workings of SVM to comprehend its prowess in the realm of NLP.

The Architecture of Support Vector Machines


At its core, SVM is a binary classification algorithm, designed to divide data points into two classes by finding the hyperplane that best separates them. Problems with more than two classes are typically handled by combining several binary SVMs, for example in a one-vs-rest scheme. In the context of text classification, SVM assigns class labels to text documents based on the occurrence and frequency of specific words or features in the document.

Finding the Optimal Hyperplane

In SVM, the "optimal" hyperplane refers to the hyperplane that maximizes the margin between the two classes of data points. The margin is the distance between the hyperplane and the closest data points from each class. SVM aims to find the hyperplane that has the largest margin, as it is more likely to generalize well to unseen data.
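The idea of a margin can be checked with a few lines of Python. The sketch below does not train an SVM; it only compares two candidate hyperplanes on toy 2-D points (all values invented for illustration) and measures the distance from each hyperplane to its closest point. The helper name `geometric_margin` is an assumption of this example.

```python
import math

def geometric_margin(w, b, points, labels):
    """Smallest signed distance from any labeled point to the hyperplane w.x + b = 0.

    A positive result means every point lies on the correct side.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    return min(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm
        for x, y in zip(points, labels)
    )

# Toy data: two points per class, labels in {-1, +1}
points = [(1.0, 1.0), (2.0, 2.0), (4.0, 4.0), (5.0, 5.0)]
labels = [-1, -1, 1, 1]

# Two hyperplanes that both separate the classes
margin_a = geometric_margin((1.0, 1.0), -6.0, points, labels)  # x + y = 6
margin_b = geometric_margin((1.0, 1.0), -5.0, points, labels)  # x + y = 5

print(f"margin A: {margin_a:.3f}, margin B: {margin_b:.3f}")
```

Both hyperplanes classify the toy points correctly, but the first sits midway between the classes and therefore has the larger margin, which is the one SVM would prefer.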

Text Representation: A Crucial Component

To apply SVM to text classification, an essential step is to represent the text documents in a numerical format that SVM can process. Commonly used text representations include Term Frequency-Inverse Document Frequency (TF-IDF) and word embeddings.

TF-IDF: TF-IDF is a statistical measure that reflects the importance of a word in a document relative to the entire corpus. It takes into account the term frequency (TF), which represents how often a word occurs in a document, and the inverse document frequency (IDF), which represents how unique or rare a word is across the entire corpus. By representing text documents using TF-IDF vectors, SVM can effectively handle high-dimensional text data.

Word Embeddings: Word embeddings, such as Word2Vec or GloVe, convert words into dense vectors in a continuous vector space, capturing semantic relationships between words. Because SVM expects one fixed-length vector per document, the word vectors are usually combined first, for example by averaging them. SVM can leverage pre-trained word embeddings in this way to enhance its performance in text classification tasks, as the embeddings encode how words are used across a large corpus.
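The TF-IDF weighting described above can be sketched from scratch as follows. This uses one common variant (term count divided by document length, times the natural log of N over document frequency); libraries often apply smoothed or normalized variants, so treat the exact formula and the function name `tfidf_vectors` as assumptions of this example.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return the sorted vocabulary and one TF-IDF vector per tokenized document."""
    vocab = sorted({t for doc in docs for t in doc})
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term
    df = Counter(t for doc in docs for t in set(doc))
    idf = {t: math.log(n_docs / df[t]) for t in vocab}
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append([counts[t] / len(doc) * idf[t] for t in vocab])
    return vocab, vectors

# Toy corpus, invented for illustration
docs = [
    "the movie was great".split(),
    "the movie was terrible".split(),
    "great acting".split(),
]
vocab, vectors = tfidf_vectors(docs)

# Weights for the second document
for term, weight in zip(vocab, vectors[1]):
    print(f"{term:10s} {weight:.3f}")
```

In the second document, "terrible" receives a higher weight than "the" because it appears in fewer documents of the corpus.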

Achieving High Accuracy in Text Classification

With the appropriate text representation and optimal hyperplane, SVM can achieve high accuracy in various text classification tasks. It has demonstrated success in sentiment analysis, topic categorization, document classification, and more.

c) Logistic Regression:

In the realm of text classification, Logistic Regression emerges as a powerful and widely used linear model that bridges the gap between words and classes. This versatile algorithm plays a crucial role in binary text classification tasks, where the objective is to categorize documents into two classes. Let's delve into the intricacies of Logistic Regression and explore its architecture and applications in sentiment analysis, text categorization, and beyond.

Logistic Regression Architecture

The architecture of Logistic Regression is rooted in linear regression, a traditional statistical technique used for predicting continuous numerical values. However, unlike linear regression, which outputs continuous values, Logistic Regression transforms the output using a logistic or sigmoid function, yielding probabilities that lie within the range [0, 1]. These probabilities reflect the likelihood of a document belonging to a specific class, with values near 0 indicating that the class is unlikely and values near 1 indicating that it is likely.

Figure: Flowchart of logistic regression

In text classification, the feature space represents the words or terms extracted from the documents. Each document is represented as a vector of features, where each feature corresponds to a word or term present in the document. The coefficients of the logistic regression model are learned during the training phase, and they determine the importance of each feature in the classification process.

Estimating Probabilities for Binary Classification

The logistic or sigmoid function is at the core of Logistic Regression's ability to estimate probabilities for binary classification. The sigmoid function maps the output of the linear model (the dot product of features and their respective coefficients) into a probability value between 0 and 1.

The sigmoid function is defined as:

P(y=1|X) = 1 / (1 + exp(-z))

Where P(y=1|X) is the probability of the document belonging to class 1, X is the feature vector of the document, and z is the dot product of X and the learned coefficients.
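The formula above translates almost directly into code. In this sketch the coefficients are hand-picked rather than learned, the three features are hypothetical word counts, and the optional `bias` term is an assumption that the formula above does not mention.

```python
import math

def sigmoid(z):
    """Map any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, coefficients, bias=0.0):
    """P(y=1|X): sigmoid of the dot product of features and coefficients."""
    z = sum(x * w for x, w in zip(features, coefficients)) + bias
    return sigmoid(z)

# Hypothetical word-count features for the terms ["great", "terrible", "movie"]
coefficients = [1.5, -2.0, 0.1]  # hand-picked for illustration, not learned

p_positive = predict_proba([2, 0, 1], coefficients)  # "great" appears twice
p_negative = predict_proba([0, 1, 1], coefficients)  # "terrible" appears once

print(f"{p_positive:.3f} {p_negative:.3f}")
```

A document is assigned to class 1 when the probability exceeds a threshold, commonly 0.5, which corresponds to z > 0.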

Applications in Sentiment Analysis and Text Categorization

Logistic Regression finds widespread use in sentiment analysis, where the goal is to determine the sentiment or emotional tone of a piece of text. By learning from labeled data, the algorithm can classify documents as positive or negative, reflecting the sentiment of the content.

Additionally, Logistic Regression is employed in text categorization tasks, where documents are classified into predefined categories or topics. Whether it's classifying news articles into different topics or detecting spam emails, Logistic Regression's simplicity and effectiveness make it an invaluable tool in various text categorization applications.

The Power of Simplicity and Interpretability

The simplicity and interpretability of Logistic Regression are among its most significant strengths. The straightforward mathematical foundation and the ability to estimate probabilities make it easy to understand how the model arrives at its classification decisions. Furthermore, the interpretability of coefficients allows us to identify the most influential words or features contributing to the classification.

2. Word Embeddings

a) Word2Vec:

In the realm of Natural Language Processing (NLP) and text classification, Word2Vec stands as a revolutionary word embedding technique that bridges the gap between raw text and meaningful representations. By capturing the intricate semantic relationships between words, Word2Vec empowers text classification models to discern the context and essence of language, ushering in a new era of language understanding. Let's delve into the architecture and workings of Word2Vec and discover how it enhances the performance of text classification models.

At the core of Word2Vec lies the concept of continuous word embeddings. Instead of representing words as sparse and discrete symbols, Word2Vec transforms them into dense vectors residing in a continuous space. This embedding space is carefully crafted to reflect the semantic similarity between words. Words with similar meanings or contextual usage are positioned closer to each other, enabling the model to comprehend their relatedness.

Context and Meaning in Word Representations

Word2Vec operates on the premise that a word's meaning is profoundly influenced by the context in which it appears. To capture this context, Word2Vec employs two main architectures: Continuous Bag of Words (CBOW) and Skip-gram.

Figure: CBOW and Skip-gram

Continuous Bag of Words (CBOW): CBOW predicts a target word based on its neighboring words in a sentence. By leveraging the context words, the model learns to create dense embeddings that encode the essence of the word's context.

Skip-gram: In contrast, Skip-gram takes a target word and attempts to predict its neighboring words. This architecture excels in capturing word-to-word relationships and is particularly effective when dealing with rare words or phrases.
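The difference between the two architectures is easiest to see in the training examples each one consumes. The following sketch only builds those examples from a tokenized sentence; it does not train any embeddings. The function names and the `window` parameter are assumptions of this illustration.

```python
def skipgram_pairs(tokens, window=2):
    """(target, context) pairs: Skip-gram predicts each context word from the target."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """(context_words, target) examples: CBOW predicts the target from its context."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples

sentence = "the cat sat on the mat".split()

print(skipgram_pairs(sentence, window=1)[:3])
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
print(cbow_examples(sentence, window=1)[1])
# (['the', 'sat'], 'cat')
```

Skip-gram turns every (target, neighbor) combination into its own training example, while CBOW produces a single example per position with the whole context as input.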

Enhancing Text Classification Models

Word2Vec's ability to capture the semantic relationships between words significantly enhances the performance of text classification models. By representing words in dense vector spaces, Word2Vec transcends the limitations of traditional sparse representations and enables classifiers to leverage the rich contextual information of language.

Pre-trained Word2Vec embeddings are particularly valuable, as they encapsulate semantic knowledge gleaned from vast text corpora. By utilizing these embeddings as features, traditional machine learning algorithms gain access to the latent semantic relationships between words, leading to more robust and accurate text classification.
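One simple way to use word embeddings as classifier features is to average the vectors of a document's words. The sketch below uses tiny hand-made 3-dimensional vectors that are purely hypothetical; real pre-trained Word2Vec vectors have far more dimensions and would be loaded from a file. The helper names are also assumptions of this example.

```python
import math

# Hypothetical 3-dimensional embeddings, invented for illustration
toy_embeddings = {
    "good": [0.9, 0.1, 0.0],
    "great": [0.8, 0.2, 0.1],
    "bad": [-0.8, 0.1, 0.0],
    "movie": [0.0, 0.9, 0.3],
    "film": [0.1, 0.8, 0.4],
}

def cosine(u, v):
    """Cosine similarity: close to 1.0 for vectors pointing the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def document_vector(tokens, embeddings):
    """Average the vectors of known tokens into one fixed-length feature vector."""
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        raise ValueError("no token of the document has an embedding")
    dim = len(known[0])
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]

doc_a = document_vector("good movie".split(), toy_embeddings)
doc_b = document_vector("great film".split(), toy_embeddings)
doc_c = document_vector("bad movie".split(), toy_embeddings)

print(f"good movie vs great film: {cosine(doc_a, doc_b):.3f}")
print(f"good movie vs bad movie:  {cosine(doc_a, doc_c):.3f}")
```

With these toy vectors, "good movie" ends up much closer to "great film" than to "bad movie", even though the first pair shares no words and the second pair shares one. A sparse bag-of-words representation cannot express that.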

3. Deep Learning Models

a) Convolutional Neural Networks (CNN):

Convolutional Neural Networks (CNNs), renowned for their exceptional performance in computer vision tasks, have also made significant strides in text classification. By leveraging their unique architecture, CNNs have demonstrated their effectiveness in deciphering the meaning behind textual data. Let's explore the inner workings of text CNNs and understand how they capture local patterns, making them particularly well-suited for tasks like sentiment analysis and document classification.

The Essence of CNNs in Computer Vision

Before delving into text CNNs, let's briefly grasp the essence of CNNs in computer vision. In image processing, CNNs excel at detecting features like edges, shapes, and textures through the use of filters (also known as kernels). These filters slide across the image, convolving with various regions and highlighting distinctive patterns. Through this process, CNNs can recognize objects, faces, and intricate details within images.

Figure: CNN in computer vision

Text CNNs: Adapting CNNs to NLP

Text CNNs bring the power of convolutional neural networks to the domain of Natural Language Processing. In contrast to images, text is a sequence of words, where the order of words carries crucial semantic information. To adapt CNNs for text classification, the architecture is tweaked to handle sequential data.

Filtering Sequences of Words

In text CNNs, filters (kernels) are applied to sequences of words instead of image regions. The filters slide over the text, capturing local patterns that represent important linguistic structures. These local patterns could be combinations of words, word pairs, or phrases that hold significant meaning within the context of the text.
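Here is a minimal sketch of a single text-CNN filter in plain Python. The 2-dimensional embeddings and the filter weights are hand-set so that the filter responds to the phrase "not good"; in a real model both would be learned. The function names are assumptions of this example.

```python
def conv1d_over_tokens(embedded, kernel, bias=0.0):
    """Slide a filter spanning len(kernel) consecutive tokens over the sequence."""
    width = len(kernel)
    feature_map = []
    for start in range(len(embedded) - width + 1):
        window = embedded[start:start + width]
        activation = bias + sum(
            x * w
            for token_vec, kernel_row in zip(window, kernel)
            for x, w in zip(token_vec, kernel_row)
        )
        feature_map.append(max(0.0, activation))  # ReLU non-linearity
    return feature_map

def max_over_time(feature_map):
    """Keep only the strongest response, wherever it occurred in the text."""
    return max(feature_map)

# Hypothetical 2-dimensional embeddings, invented for illustration
embeddings = {"not": [0.0, 1.0], "good": [1.0, 0.0]}
unknown = [0.0, 0.0]

def embed(text):
    return [embeddings.get(token, unknown) for token in text.split()]

# A width-2 filter hand-set to respond to "not" followed by "good"
not_good_filter = [[0.0, 1.0], [1.0, 0.0]]

negated = conv1d_over_tokens(embed("this movie is not good"), not_good_filter)
plain = conv1d_over_tokens(embed("this movie is good"), not_good_filter)

print(negated, max_over_time(negated))
print(plain, max_over_time(plain))
```

The filter fires most strongly on the window containing "not good", and max-over-time pooling carries that signal forward regardless of where the phrase appears in the sentence.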

Local Context and Semantic Understanding

The utilization of filters enables text CNNs to grasp the local context of words, thereby understanding the intricate relationships between neighboring words. By capturing local patterns, text CNNs become adept at recognizing specific linguistic elements that influence the overall meaning of the text.

Application in Sentiment Analysis and Document Classification

Text CNNs have found substantial success in sentiment analysis and document classification tasks. Sentiment analysis involves determining the sentiment (positive, negative, or neutral) expressed in a piece of text. Text CNNs can discern local patterns indicative of specific sentiments, enabling accurate sentiment classification.

Similarly, in document classification, text CNNs can identify local patterns and linguistic cues that reveal the nature of the document, allowing for precise categorization into relevant classes or topics.

The Advantages of Local Patterns

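One of the key advantages of text CNNs lies in their ability to focus on local patterns: a filter can recognize an informative phrase wherever it appears in the text. The trade-off is that each filter sees only a small window of words, so long-range dependencies are captured only indirectly, through pooling or stacked layers. This characteristic makes text CNNs particularly valuable in scenarios where understanding the local semantics is paramount.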

b) Recurrent Neural Networks (RNN):

In the realm of Natural Language Processing (NLP) and text classification, Recurrent Neural Networks (RNNs) stand as a powerful class of algorithms specifically designed to handle sequential data, making them well-suited for tasks involving language understanding. RNNs are capable of capturing the sequential nature of text, allowing them to unravel the intricacies of sentences and comprehend long-range dependencies within the context. However, traditional RNNs face challenges such as the vanishing gradient problem, which can hinder their performance. To overcome these limitations, variations like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) have been introduced, becoming prominent choices for text classification. Let's delve into the architecture and workings of RNNs to gain a deeper understanding of their role in language processing.

Architecture and Sequential Data Handling

The architecture of an RNN is structured to process sequential data, making it ideal for text classification tasks. Unlike traditional feedforward neural networks, RNNs have loops that allow information to persist across time steps. This looping mechanism enables RNNs to take into account the entire sequence of words in a sentence, retaining a memory of the past words as it processes the current one.
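The looping mechanism can be sketched with a scalar recurrent unit. This is a deliberately tiny illustration: real RNNs use vectors and weight matrices, and the weights `w_x` and `w_h` below are hand-picked assumptions rather than learned values.

```python
import math

def rnn_forward(inputs, w_x, w_h, b=0.0):
    """Run a scalar recurrent unit and return the hidden state at every time step."""
    h = 0.0  # initial hidden state
    states = []
    for x in inputs:
        # The new state depends on the current input AND the previous state
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# The same inputs in a different order lead to different final states
early_signal = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5)
late_signal = rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5)

print([round(h, 3) for h in early_signal])
print([round(h, 3) for h in late_signal])
```

Because the hidden state is fed back at each step, the final state reflects the order of the inputs, something a bag-of-words model cannot do.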

Figure: RNN architecture and sequential data handling

Capturing Long-Range Dependencies

One of the primary strengths of RNNs lies in their ability to capture long-range dependencies in sentences. In language, the meaning of a word can be heavily influenced by its context and the words that came before it. RNNs can model these dependencies and understand the sentence structure in a way that traditional algorithms may struggle to achieve.

Figure: Long-range dependencies

The Vanishing Gradient Problem

However, traditional RNNs face a challenge known as the vanishing gradient problem. As the network backpropagates through time during training, the gradients of earlier time steps may become infinitesimally small, making it difficult to update the weights effectively and learn from long-range dependencies. This limitation can hinder the ability of traditional RNNs to effectively capture long-term dependencies in text.

Figure: The vanishing gradient problem

Enter LSTM and GRU

To understand the architectural advancements of LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) in addressing the vanishing gradient problem, let's explore how these specialized variations of RNNs revolutionized the field of Natural Language Processing (NLP) and other sequential data tasks.

The Vanishing Gradient Problem in RNNs

Traditional RNNs were initially designed to process sequential data by maintaining a hidden state that captures information from previous time steps. However, during backpropagation, when updating the model parameters based on the gradient of the loss function, RNNs encountered a significant challenge known as the vanishing gradient problem.

The vanishing gradient problem arises when the gradients of the loss function with respect to the model parameters become extremely small as they propagate back through time. Consequently, the RNN struggles to capture long-range dependencies in the data and may fail to effectively learn from distant past time steps. As a result, the model's ability to retain important information over long sequences is limited, leading to suboptimal performance in tasks like language modeling, sentiment analysis, and machine translation.
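A short numeric experiment shows how quickly the gradient can shrink. For a scalar tanh recurrent unit, the derivative of one hidden state with respect to the previous one is the recurrent weight times (1 - tanh(a)^2), and backpropagation through time multiplies one such factor per step. The weight and pre-activation values below are assumptions chosen for illustration.

```python
import math

def gradient_through_time(w_h, pre_activations):
    """Running product of per-step factors w_h * (1 - tanh(a_t)^2)."""
    grad = 1.0
    history = []
    for a in pre_activations:
        grad *= w_h * (1.0 - math.tanh(a) ** 2)
        history.append(grad)
    return history

# 50 time steps with the same (assumed) pre-activation at each step
history = gradient_through_time(w_h=0.5, pre_activations=[0.5] * 50)

for step in (1, 10, 25, 50):
    print(f"after {step:2d} steps: {history[step - 1]:.3e}")
```

Each factor here is below 1, so the product decays exponentially with the number of steps. After a few dozen steps the gradient is effectively zero, and early words contribute almost nothing to the weight updates.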

LSTM and GRU: Introducing Gating Mechanisms

To overcome the vanishing gradient problem, LSTM and GRU were introduced as architectural advancements of RNNs. These variations incorporate specialized gating mechanisms that enable the network to control the flow of information, selectively retaining or forgetting information over time.

1. LSTM (Long Short-Term Memory):

Figure: LSTM recurrent neural network

LSTM introduces three gating mechanisms - the input gate, forget gate, and output gate - that regulate the flow of information in and out of the memory cell. The input gate controls how much new information is added to the cell, the forget gate determines what information to discard from the cell, and the output gate filters the information to be output from the cell. By dynamically controlling the flow of information, LSTM can effectively capture long-term dependencies and retain essential information over extended sequences.

2. GRU (Gated Recurrent Unit):

GRU is a simplified version of LSTM that features two gating mechanisms - the reset gate and update gate. The reset gate determines how much of the past hidden state is used when computing the new candidate state, and the update gate controls how much of that candidate replaces the previous hidden state. With fewer gates and no separate memory cell, GRU has fewer parameters than LSTM and is typically cheaper to compute, while still offering competitive performance in various NLP tasks.

Figure: Gated Recurrent Unit
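The gate equations of both cells fit in a few lines when written for scalar states. This is a sketch under simplifying assumptions: real implementations use vectors and matrices, the parameter layout (`name -> (w_x, w_h, bias)`) is invented for this example, and the GRU update uses the convention in which the update gate weights the candidate state (some references and libraries swap the roles of z and 1 - z).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One scalar LSTM step; params maps gate name -> (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))         # how much new content to write
    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # proposed new content
    c = f * c_prev + i * g            # updated memory cell
    h = o * math.tanh(c)              # updated hidden state
    return h, c

def gru_step(x, h_prev, params):
    """One scalar GRU step; params maps gate name -> (w_x, w_h, bias)."""
    def pre(name, h):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h + b

    z = sigmoid(pre("update", h_prev))                     # update gate
    r = sigmoid(pre("reset", h_prev))                      # reset gate
    h_candidate = math.tanh(pre("candidate", r * h_prev))  # candidate state
    return (1.0 - z) * h_prev + z * h_candidate

# Biases hand-set so the LSTM keeps its memory: forget gate open, input gate closed
lstm_params = {
    "input": (0.5, 0.5, -20.0),
    "forget": (0.5, 0.5, 20.0),
    "output": (0.5, 0.5, 0.0),
    "candidate": (0.5, 0.5, 0.0),
}
h_lstm, c_lstm = lstm_step(x=1.0, h_prev=0.0, c_prev=0.7, params=lstm_params)

# Update gate biased towards 0, so the GRU keeps its previous hidden state
gru_params = {
    "update": (0.5, 0.5, -20.0),
    "reset": (0.5, 0.5, 0.0),
    "candidate": (0.5, 0.5, 0.0),
}
h_gru = gru_step(x=1.0, h_prev=0.3, params=gru_params)

print(f"LSTM cell state: {c_lstm:.6f}, GRU hidden state: {h_gru:.6f}")
```

With these hand-set biases both cells carry their previous state through the step almost unchanged. That additive carry-over path is what lets gradients survive across many time steps.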

Mitigating the Vanishing Gradient Issue

By incorporating gating mechanisms, LSTM and GRU models effectively mitigate the vanishing gradient problem in RNNs. These architectures allow the network to regulate the flow of gradients through time, facilitating the propagation of meaningful information over longer sequences. This capability enhances the model's ability to capture dependencies and long-term patterns in sequential data, making them particularly well-suited for processing natural language, time series data, and other sequential information.

Role in Text Classification

RNNs, especially LSTM and GRU, have become prominent choices for text classification tasks due to their ability to process sequential data and capture long-range dependencies. Their effectiveness is evident in applications such as sentiment analysis, document classification, and language translation, where understanding the context and sequence of words is crucial for accurate predictions.

c) Transformers (BERT, GPT-3, and RoBERTa):

The landscape of Natural Language Processing (NLP) has witnessed a seismic shift with the advent of transformers, and their impact has been nothing short of revolutionary. Models like BERT (Bidirectional Encoder Representations from Transformers), GPT-3 (Generative Pre-trained Transformer 3), and RoBERTa (A Robustly Optimized BERT Pretraining Approach) have reshaped the way we approach NLP and text classification. Let's unravel the intricacies of these transformers and explore how they have ushered in a new era of language understanding.

The Power of Attention Mechanisms

Figure: Attention mechanism

At the core of transformers lies the revolutionary attention mechanism. Unlike traditional sequence-to-sequence models, which process words sequentially, transformers utilize self-attention to capture contextual relationships between words in a sentence. This allows the model to weigh the importance of each word based on its relevance to other words in the sentence, enabling a more comprehensive understanding of the entire text.
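Self-attention can be sketched in plain Python as scaled dot-product attention. To keep the example short, the token vectors are used directly as queries, keys, and values; real transformers first apply learned projection matrices and use several attention heads. The toy vectors and function names are assumptions of this illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence of token vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors
        out = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors, invented for illustration
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row shows how strongly one token attends to every token in the sequence, including itself. All positions are compared at once rather than one step at a time.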

Bidirectional Contextual Information

Figure: The bidirectional language model

One of the key advancements of transformers is their ability to capture bidirectional contextual information. Traditional models, like LSTMs, process sentences sequentially, which limits their understanding of words in the context of both preceding and subsequent words. In contrast, encoder models such as BERT and RoBERTa consider all words in the sentence simultaneously, allowing them to create a contextualized representation for each word based on its entire surrounding context. GPT-3 is the exception here: as a generative model, it attends only to the words that precede the current position.

The Rise of Pre-trained Models

BERT, GPT-3, and RoBERTa have set new benchmarks in NLP by leveraging the power of pre-training. These models are initially trained on large, diverse datasets to learn the rich linguistic patterns and nuances present in the language. The pre-training process involves predicting masked words (BERT and RoBERTa) or predicting the next token (GPT-3) in a contextually aware manner. As a result, these models develop a deep understanding of language, making them highly effective in various NLP tasks.
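The two pre-training objectives mentioned above differ mainly in how training examples are built from raw text. The sketch below constructs one example of each kind from a tokenized sentence. It is a simplification: real pre-training masks a random subset of sub-word tokens across huge corpora, and the function names and the `[MASK]` placeholder handling are assumptions of this example.

```python
def masked_lm_example(tokens, mask_index, mask_token="[MASK]"):
    """Masked-word objective: hide one token and predict it from both sides."""
    inputs = list(tokens)
    target = inputs[mask_index]
    inputs[mask_index] = mask_token
    return inputs, target

def next_token_examples(tokens):
    """Generative objective: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

sentence = "the cat sat".split()

print(masked_lm_example(sentence, mask_index=1))
# (['the', '[MASK]', 'sat'], 'cat')
print(next_token_examples(sentence))
# [(['the'], 'cat'), (['the', 'cat'], 'sat')]
```

In the masked example the model sees context on both sides of the gap, while in the generative examples it only ever sees the words to the left of the one it must predict.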

Fine-Tuning for Specific Tasks

While pre-training gives transformers a strong foundation in language understanding, fine-tuning is the crucial step that tailors these models to specific tasks, such as text classification. During fine-tuning, transformers are further trained on task-specific datasets, allowing them to adapt their knowledge to the nuances of the particular task at hand. Fine-tuning pre-trained transformers has become the go-to approach for achieving state-of-the-art performance in text classification tasks.

Applications and Impact

Transformers have found extensive applications in a wide range of NLP tasks, including sentiment analysis, named entity recognition, machine translation, and question-answering, among others. Their ability to handle long-range dependencies, capture context, and generalize well to various tasks has made them indispensable tools in modern NLP research and industry applications.

A Paradigm Shift in NLP

The rise of transformers, exemplified by BERT, GPT-3, and RoBERTa, marks a paradigm shift in NLP. Their attention mechanisms, bidirectional context modeling, and pre-training capabilities have pushed the boundaries of language understanding. As these models continue to evolve and larger datasets become available, transformers are poised to drive further breakthroughs in NLP and reshape the way we interact with, comprehend, and process language.

Conclusion:

In conclusion, the field of Natural Language Processing (NLP) has come a long way, and much of its progress can be attributed to the availability of robust libraries, frameworks, and techniques for text classification. From traditional machine learning algorithms like Naive Bayes, SVM, and Logistic Regression to deep learning models such as CNNs, RNNs, and transformer-based models like BERT, GPT-3, and RoBERTa, each approach has its unique strengths and contributions to the world of language understanding.

Transformers, in particular, have revolutionized NLP and text classification with their attention mechanisms, bidirectional context modeling, and fine-tuning approaches. They have empowered researchers and practitioners to achieve unprecedented accuracy and performance in various language tasks, and the promise of their future capabilities continues to excite the NLP community.

Text classification techniques, whether based on traditional machine learning or deep learning, play a pivotal role in extracting valuable insights from vast amounts of textual data. The choice of technique depends on the specific task, available data, and computational resources, making NLP a diverse and evolving field with immense promise for groundbreaking research and transformative applications.

As we continue to explore the capabilities of text classification techniques, we bridge the gap between the raw words and the meaningful information they carry, unlocking the true potential of language understanding. Whether it's sentiment analysis, spam detection, topic categorization, or any other NLP task, the wealth of tools and algorithms available empowers us to harness the power of language and transform the way we interact with and comprehend textual data.

In this era of unprecedented advancements, RNNs, CNNs, SVM, Logistic Regression, Naive Bayes, and transformers all stand as integral components, collectively enriching the landscape of Natural Language Processing and driving us towards deeper insights into the complexities of human language. As NLP continues to evolve, the future holds tremendous promise, and with these powerful techniques at our disposal, the possibilities for innovative research and transformative applications are truly limitless. Let's embrace the potential of NLP and embark on an exciting journey to unlock the true essence of natural language. Happy exploring! 🌐💡🚀

